Large Language Model (LLM) - Advanced settings

Advanced settings available for LLMs

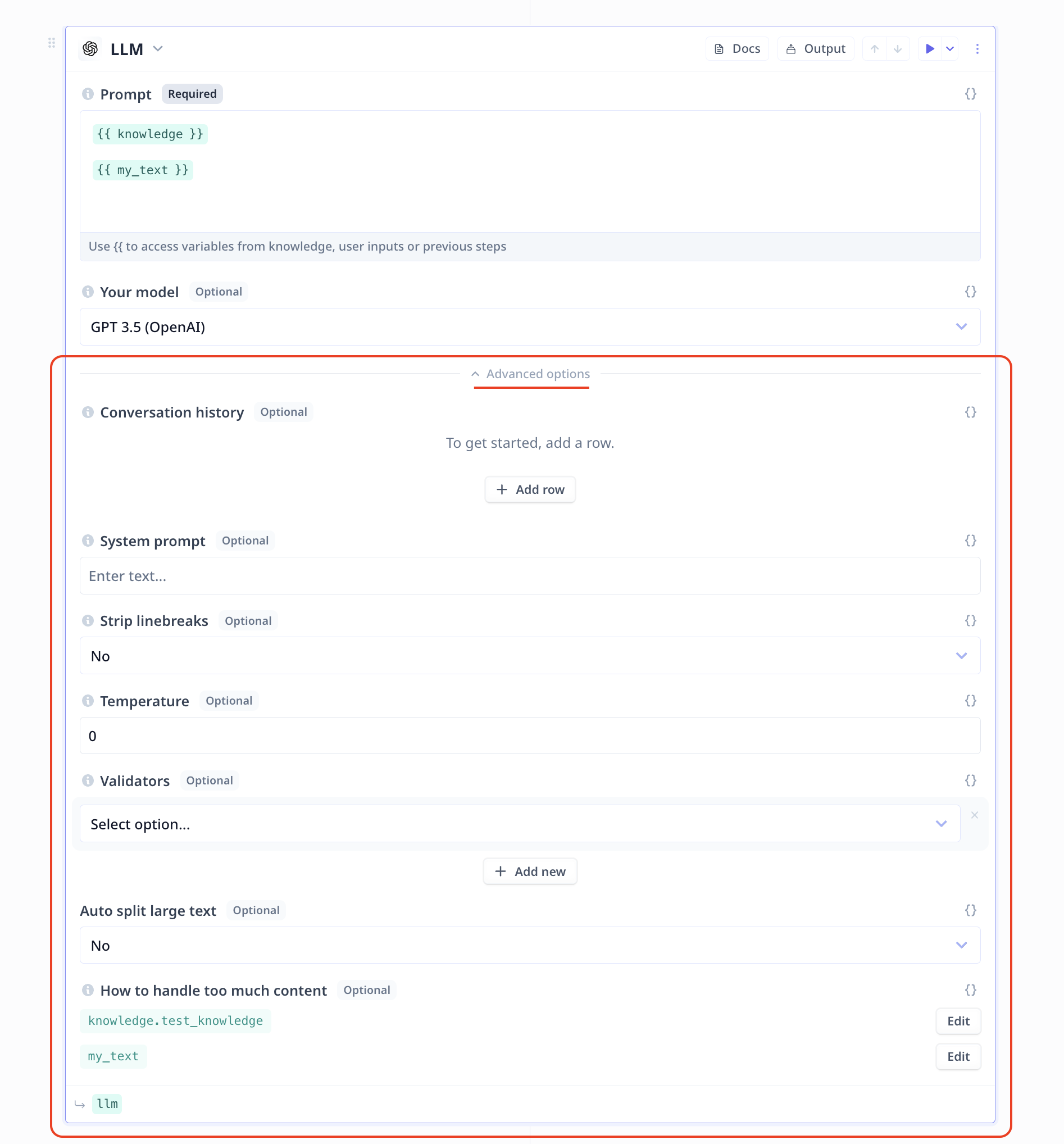

There is a lot more to an LLM than the prompt and the model. Click on “Advanced options” on the LLM step and you can:

- Add conversation history: to provide context and specify roles for a conversation with the LLM

- Add a system prompt: to tell the LLM who it is or what role it plays, and what is generally expected of it

- Set parameters such as temperature, validators and text preprocessing

- Choose the best way of handling large amounts of data when you need to provide a large context for the LLM to work with

Conversation history

Click on + Add row to add lines of a conversation taking place between a “user” and an “ai”.

The only acceptable roles are “ai” and “user”.

Conversation history is useful in conversational agents and helps the AI know more about the situation. Such a history can also make for a more personalized experience. For example, “Hello, my name is Sam” is likely to get a response such as “Hello Sam - How can I help you?”.
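As an illustration only, the rows of a conversation history can be thought of as a list of role/message pairs. The sketch below is not the platform's API: the field names `role` and `message` and the helper `validate_history` are hypothetical, and the only rule taken from this page is that roles must be “ai” or “user”.

```python
# Hypothetical representation of conversation history rows.
# Only the two role names come from this page; everything else is assumed.
ALLOWED_ROLES = {"user", "ai"}


def validate_history(rows):
    """Return the rows unchanged if every role is allowed, else raise ValueError."""
    for index, row in enumerate(rows):
        if row["role"] not in ALLOWED_ROLES:
            raise ValueError(
                f"row {index}: role must be 'user' or 'ai', got {row['role']!r}"
            )
    return rows


history = validate_history([
    {"role": "user", "message": "Hello, my name is Sam"},
    {"role": "ai", "message": "Hello Sam - How can I help you?"},
])
```

A row with any other role, such as “assistant”, would be rejected by this check.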

System prompt

A system prompt is composed of notes, instructions and guidelines that normally direct the AI to assume a certain role, follow a specific format, or respect certain limitations.

For instance: “You are an expert on the solar system. Answer the following questions in a concise and informative manner.”
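One way to picture how a system prompt relates to the rest of the input is as the first entry in the sequence sent to the model, ahead of any conversation history and the current prompt. This is a minimal sketch under that assumption; the helper `build_messages`, the `"system"` label and the field names are hypothetical and do not describe how the LLM step is implemented.

```python
def build_messages(system_prompt, history, prompt):
    """Assemble a hypothetical input sequence: system prompt, history, then the new prompt."""
    messages = [{"role": "system", "message": system_prompt}]  # assumed label
    messages.extend(history)                                   # earlier "user"/"ai" rows
    messages.append({"role": "user", "message": prompt})       # the current prompt
    return messages


messages = build_messages(
    system_prompt=(
        "You are an expert on the solar system. Answer the following "
        "questions in a concise and informative manner."
    ),
    history=[],
    prompt="Which planet has the most moons?",
)
```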

Strip line-breaks

This is a text pre-processing parameter. If set to yes, all line breaks are removed from the provided prompt.

This might be handy when the prompt is slightly larger than the context capacity of the selected model.
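The effect of this option can be approximated in a few lines. The function name `strip_line_breaks` is hypothetical, and replacing each line break with a single space (rather than with nothing) is an assumption made here so that words on adjacent lines do not run together; this page does not say which the step does.

```python
def strip_line_breaks(prompt, replacement=" "):
    """Remove line breaks from a prompt, joining the lines with `replacement`.

    The default of one space is an assumption; pass "" to drop breaks entirely.
    """
    return replacement.join(prompt.splitlines())


prompt = "Summarize the text below.\n\nKeep it short.\r\nUse plain language."
flattened = strip_line_breaks(prompt)
```

Because `splitlines()` handles both `\n` and `\r\n`, the sketch works on text pasted from different sources. Blank lines become extra spaces in this version.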

Temperature

Temperature is a hyperparameter, ranging in (0,1), that affects the randomness (sometimes referred to as creativity) of the LLM’s response. Higher temperature values are expected to produce more random, creative and diverse responses. However, such responses might also drift away from the intended context.
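To see why a higher temperature gives more varied output, it helps to look at how temperature is commonly applied when a model samples its next token: the raw scores (logits) are divided by the temperature before being turned into probabilities. The sketch below illustrates that general technique with made-up scores; it is not a description of how any particular model behind the LLM step is implemented.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
low = softmax_with_temperature(logits, 0.2)   # sharply favors the top token
high = softmax_with_temperature(logits, 1.0)  # spreads probability more evenly
```

With the lower temperature almost all of the probability lands on the highest-scoring token, so repeated runs tend to give the same answer. With the higher temperature the alternatives keep a meaningful share, which is where the extra diversity comes from.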

In the next two pages, we will explain about
